|

I am currently a Research Scientist at ByteDance/TikTok, working on video synthesis and generation. Previously, I was a Postdoc Fellow supervised by Professor Hao (Richard) Zhang in the GrUVi Lab at Simon Fraser University (SFU), Canada. During my postdoc, I worked on 3D Shape Reconstruction and Content Creation. I received my PhD degree from Peking University, where I worked on Computer Vision and Computer Graphics. During my PhD, I focused on applying deep generative models to analyze and synthesize 2D geometric data (e.g., glyphs, fonts, and layouts). I was supervised by Prof. Zhouhui Lian and Prof. Jianguo Xiao. Email / CV / Google Scholar / GitHub / Twitter |

|

|

|

I have strong interests in shape synthesis and analysis. Specifically, my research projects cover the following topics: 3D Reconstruction, Font Generation, Layout Generation, Scene Text (Character) Recognition and Detection, Font Recognition, etc. Representative papers are highlighted. |

|

|

Xiaoyan Cong, Haotian Yang, Angtian Wang, Yizhi Wang, Yiding Yang, Canyu Zhang, Chongyang Ma CVPR, 2026 project page / arXiv / code A video editing framework that encodes editing instructions with a vision-language model (VLM) and leverages GRPO to enhance editing performance. |

|

|

Yufan Deng, Yuanyang Yin, Xun Guo, Yizhi Wang, Jacob Zhiyuan Fang, Shenghai Yuan, Yiding Yang, Angtian Wang, Bo Liu, Haibin Huang, Chongyang Ma ICLR, 2026 project page / arXiv / code / video A novel conditioning approach with subject disentanglement for identity-consistent video generation from multiple reference images. |

|

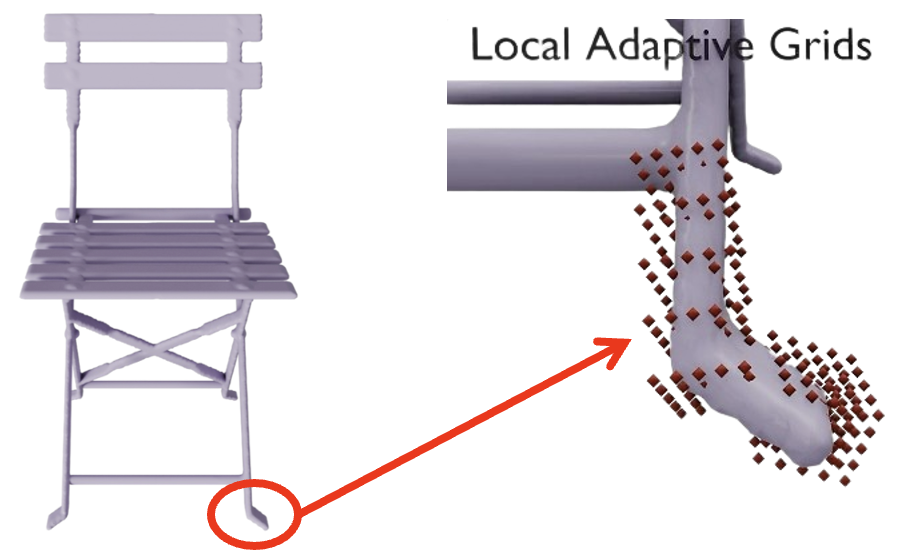

Dingdong Yang, Yizhi Wang, Konrad Schindler, Ali Mahdavi-Amiri, Hao (Richard) Zhang, ICLR, 2025 project page / arXiv / code / video A novel representation of 3D shapes using oriented and anisotropic local grids. |

|

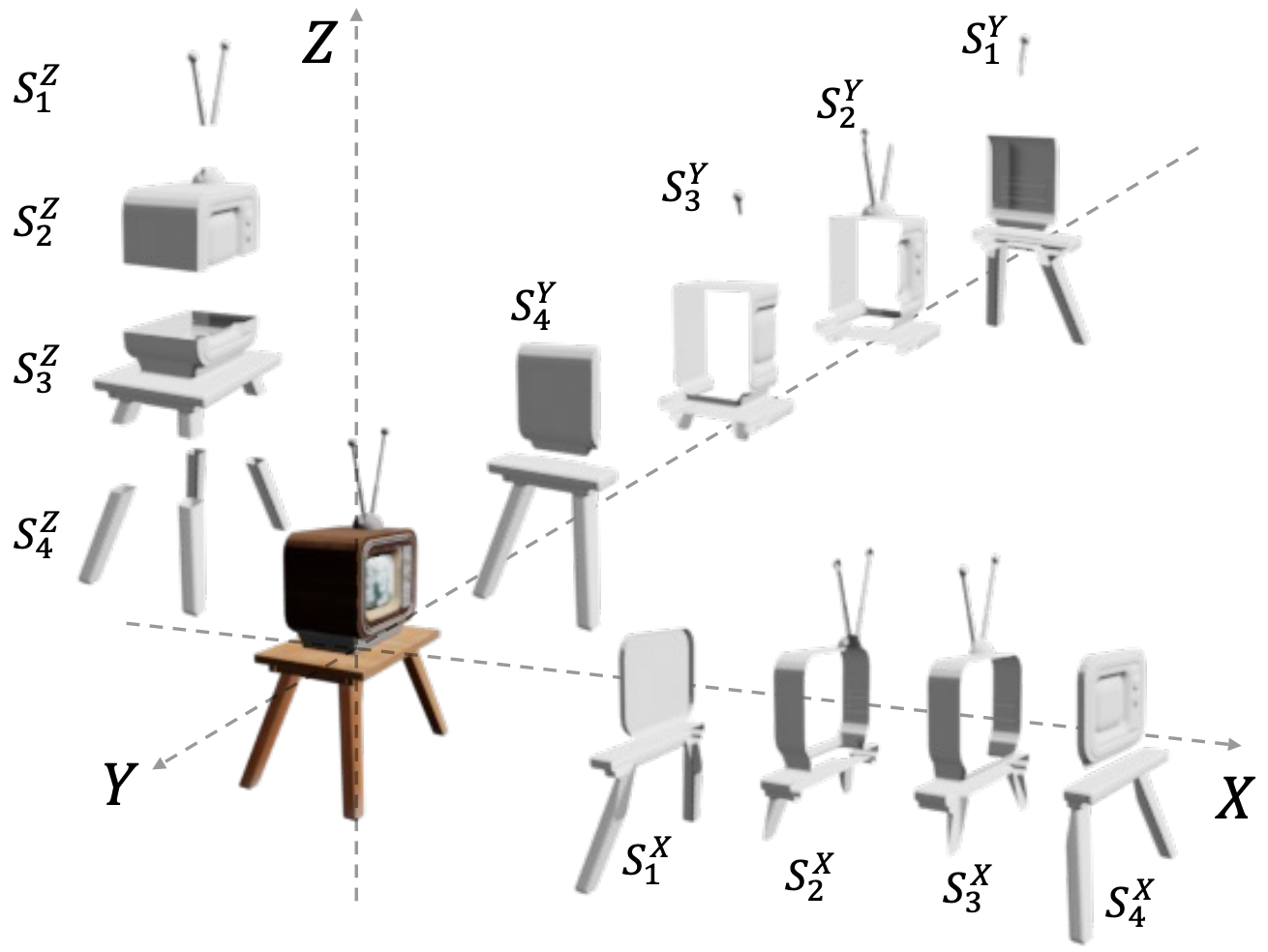

Yizhi Wang, Wallace Lira, Wenqi Wang, Ali Mahdavi-Amiri, Hao (Richard) Zhang, CVPR, 2024 project page / arXiv / code / video Our single-view 3D reconstruction method, Slice3D, predicts multi-slice images to reveal occluded parts without changing the camera (in contrast to multi-view synthesis), and then lifts the slices into a 3D model. |

|

Mingrui Zhao, Yizhi Wang, Fenggen Yu, Changqing Zou, Ali Mahdavi-Amiri, ECCV, 2024 project page / arXiv / code / video A novel approach for shape abstraction via sweep surfaces, which utilize superellipses for profile representation and B-spline curves for the axis. |

|

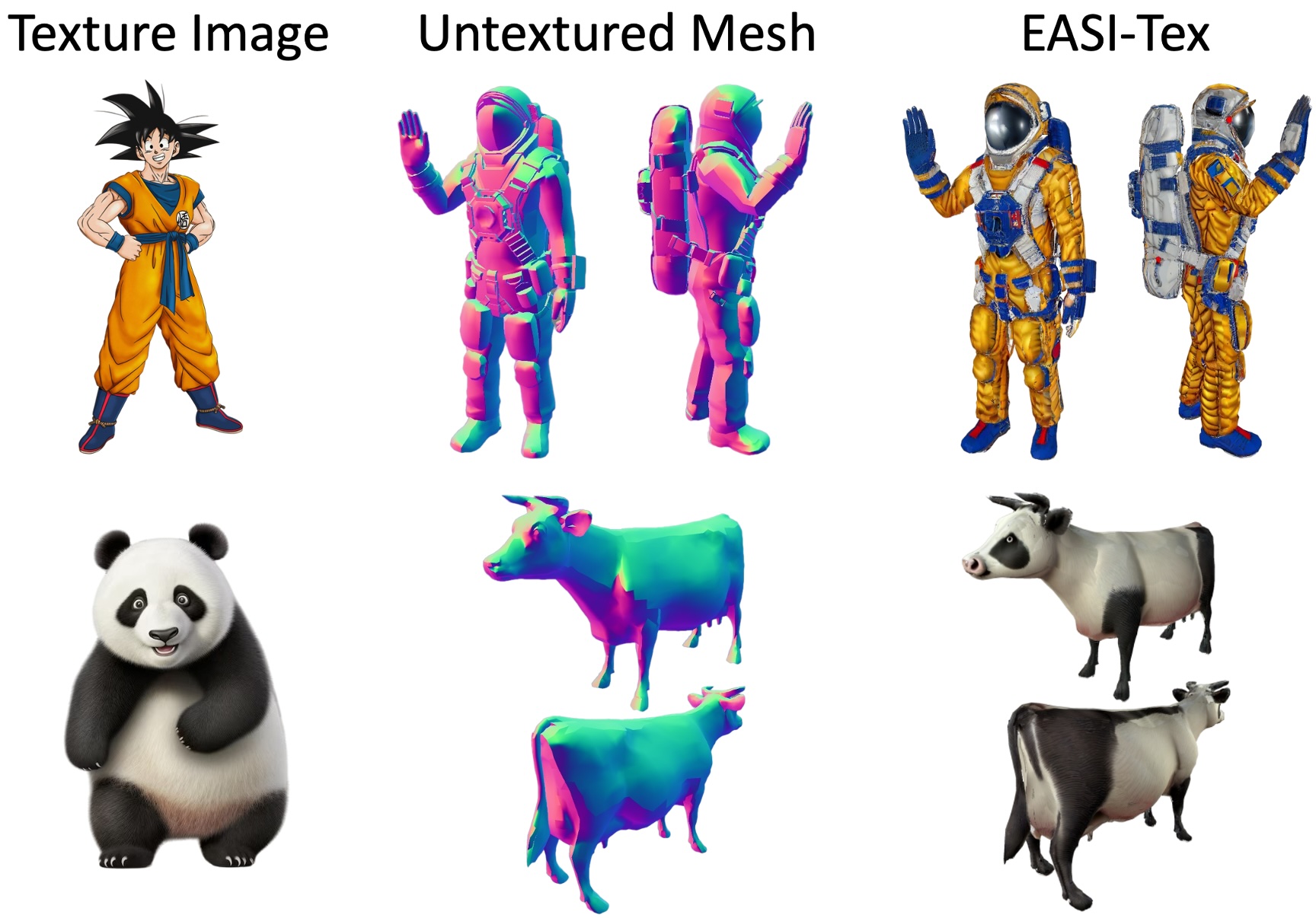

Sai Raj Kishore Perla, Yizhi Wang, Ali Mahdavi-Amiri, Hao (Richard) Zhang, ACM Transactions on Graphics (SIGGRAPH Journal-Track), 2024 project page / arXiv / code / video A novel approach for single-image mesh texturing, which employs diffusion models with judicious conditioning to seamlessly transfer textures. |

|

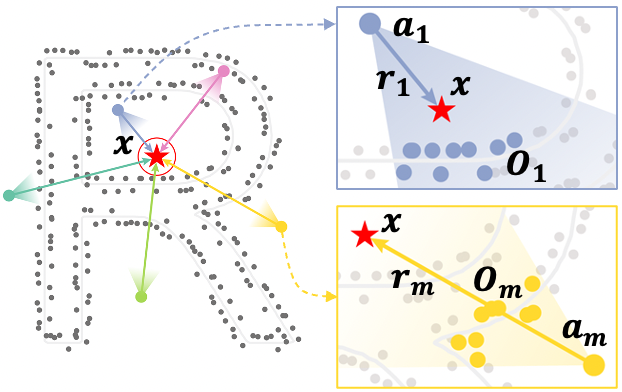

Yizhi Wang*, Zeyu Huang*, Ariel Shamir, Hui Huang, Hao (Richard) Zhang, Ruizhen Hu CVPR, 2023 project page / arXiv / code / video A novel shape encoding for learning neural field representation of shapes that is category-agnostic and generalizable amid significant shape variations. |

|

|

Maham Tanveer, Yizhi Wang, Ali Mahdavi-Amiri, Hao (Richard) Zhang ICCV, 2023 project page / arXiv / code Automatically generates artistic typography by stylizing one or more letter fonts to visually convey the semantics of an input word. |

|

Dingdong Yang, Yizhi Wang, Ali Mahdavi-Amiri, Hao (Richard) Zhang arXiv, 2023 project page / arXiv / code A bi-level feature representation for an image collection, consisting of a per-image latent space on top of a multi-scale feature grid space. |

|

|

Yuqing Wang, Yizhi Wang, Longhui Yu, Yuesheng Zhu, Zhouhui Lian CVPR, 2023 project page / arXiv / code Employs Transformers and a relaxed representation for higher-quality vector font generation (supports Chinese glyphs). |

|

|

Yizhi Wang, Guo Pu, Wenhan Luo, Yexin Wang, Pengfei Xiong, Hongwen Kang, Zhouhui Lian CVPR, 2022 project page / arXiv / code A content-aware layout generation network which takes element images and their corresponding content (such as texts) as input and synthesizes aesthetic layouts for them automatically. |

|

|

Yizhi Wang, Zhouhui Lian ACM Transactions on Graphics (SIGGRAPH Asia 2021 Technical Paper), 2021 project page / arXiv / code Directly synthesize vector fonts via dual-modality learning and differentiable rasterization (rendering), instead of vectorizing synthesized glyph images by rule-based methods. |

|

|

Yizhi Wang*, Yue Gao*, Zhouhui Lian ACM Transactions on Graphics (SIGGRAPH 2020 Technical Paper), 2020 project page / arXiv / code Automatically synthesize fonts according to user-specified attributes (such as italic, serif, cursive, and angularity) and their corresponding values. |

|

|

Yizhi Wang, Zhouhui Lian ACM Multimedia, 2020 project page / arXiv / code Addresses the challenge of font variance in scene text recognition (STR) and proposes a font-independent feature representation method to increase the robustness of STR models. |

|

Yizhi Wang, Zhouhui Lian MMM, 2018 project page / paper / dataset Accurately recognize the font styles of texts in natural images by proposing a novel method based on deep learning and transfer learning. |

|

|

|

|

Merit Student, Peking University. 2018, 2021

Excellent Student, Wangxuan Institute of Peking University. 2020, 2021

CETC The 14th Research Institute Glarun Scholarship, Peking University. 2018

Excellent Award, The 17th Programming Contest of Peking University. 2018 |

|

|